In recent months, reports have surfaced that Israel is deploying artificial intelligence not only on the battlefield in Gaza but also online, where the goal is to influence public opinion through social media bots spreading disinformation.

Israel has a long history of using social media for propaganda, whether by working with tech companies to flag pro-Palestine content or by mobilizing troll armies to support its narrative. With the advent of AI, Israel has found new methods to shape public opinion in its favor, employing AI-driven social media accounts to “debunk” allegations against the state.

Nour Naim, a Palestinian AI researcher, has observed this shift firsthand. “From day one, I have encountered a troubling number of fake accounts disseminating Israeli propaganda,” Naim told MintPress News. “These accounts, functioning as digital trolls, systematically attempt to either discredit well-documented evidence of the genocide in Gaza—backed by audio and video proof—or distort the facts altogether.”

Israel’s AI Troll Army

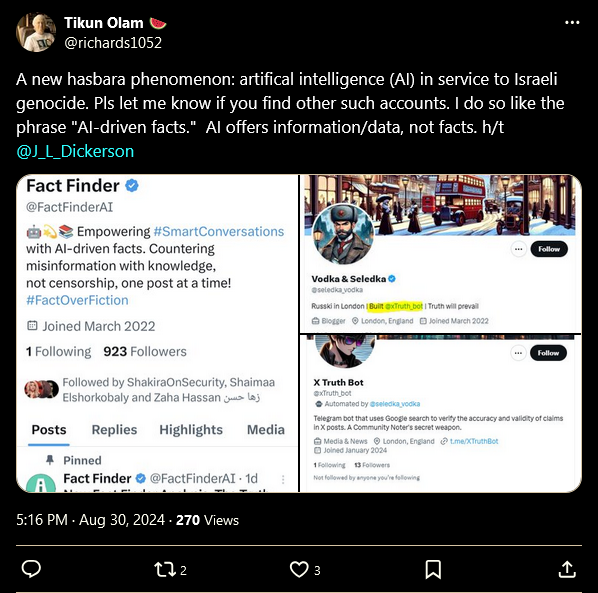

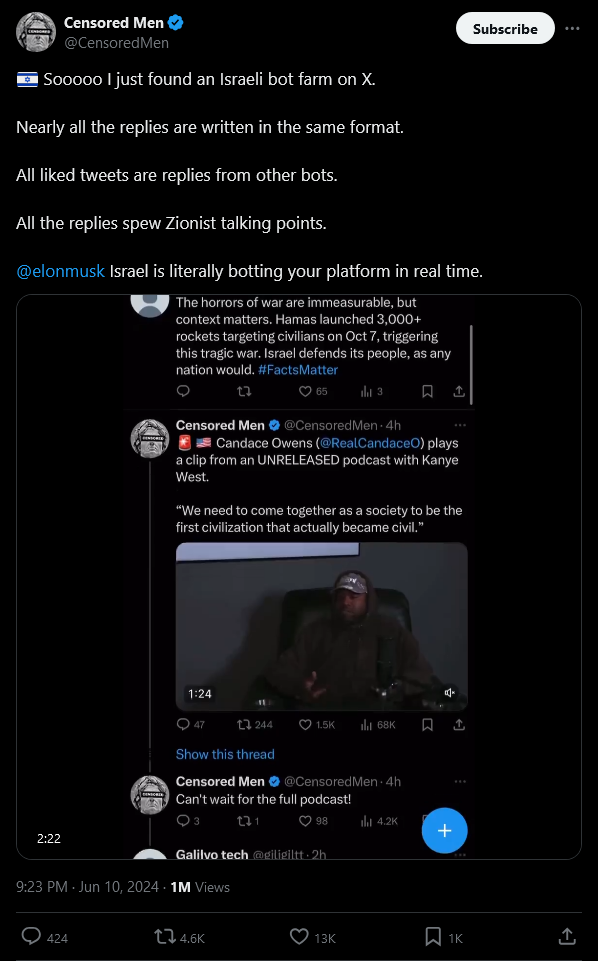

Israel has long used social media to manage its image with great effect. It collaborates with tech firms to flag pro-Palestinian content and employs coordinated online groups to quell online criticism. But now, AI offers new tools for influence. MintPress News has identified several accounts operating under names like Fact Finder (@FactFinder_AI), X Truth Bot (@XTruth_bot and @xTruth_zzz), Europe Invasion (@EuropeInvasionn), Robin (@Robiiin_Hoodx), Eli Afriat (@EliAfriatISR), AMIRAN (@Amiran_Zizovi), and Adel Mnasa (@AdelMnasa96892). Each account appears designed to “debunk” criticisms and circulate a streamlined pro-Israel perspective.

“These people aren’t real. Ai israel bots,” X user PoliticallyApatheticBozo (@the1whosright) wrote on September 10, 2024 (pic.twitter.com/ahzHvQMcmk). “I got banned for a week when I made this list btw and I’ve been shadowbanned ever since.”

The first three accounts do not mention Israel in their bios, but most of their posts exhibit clear signs of pro-Israel propaganda.

Fact Finder’s bio states: “Empowering #SmartConversations with AI-driven facts. Countering misinformation with knowledge, not censorship, one post at a time! #FactOverFiction.”

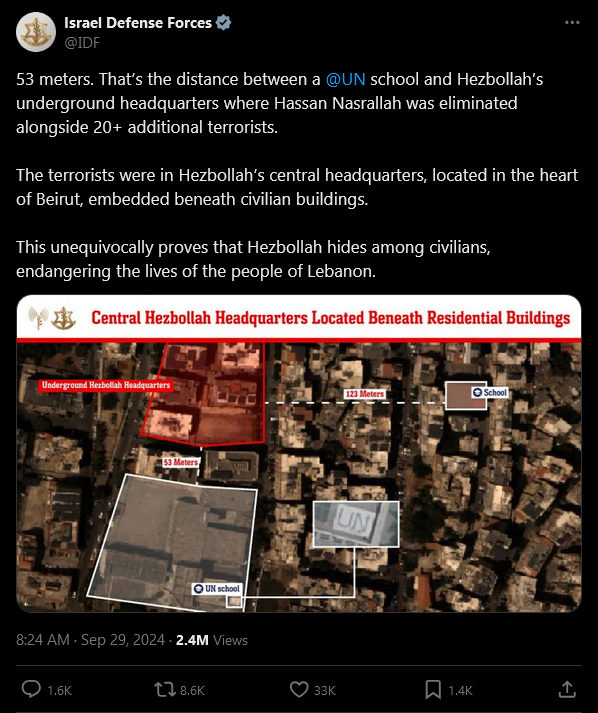

The account’s posts aim to debunk information portraying Israel negatively. This is done by replying to news articles or pro-Palestinian voices or by creating posts purportedly refuting reports on Israel’s killings of Palestinian civilians and aid workers, as well as other acts of state violence. Yet these so-called clarifications read like they’re straight from a military spokesperson’s transcript—often justifying attacks as the elimination of terrorists.

The X Truth Bot operates under two profiles: @XTruth_bot, established in August 2024, and @xTruth_zzz, created in January 2024. Both accounts are managed by the user Vodka & Seledka (@seledka_vodka), a British-Russian blogger. They promote a Telegram bot, which Vodka & Seledka claims to have developed, that purportedly uses Google search to verify statements made on X. Notably, Vodka & Seledka follows several pro-Israel accounts and frequently shares Zionist content.

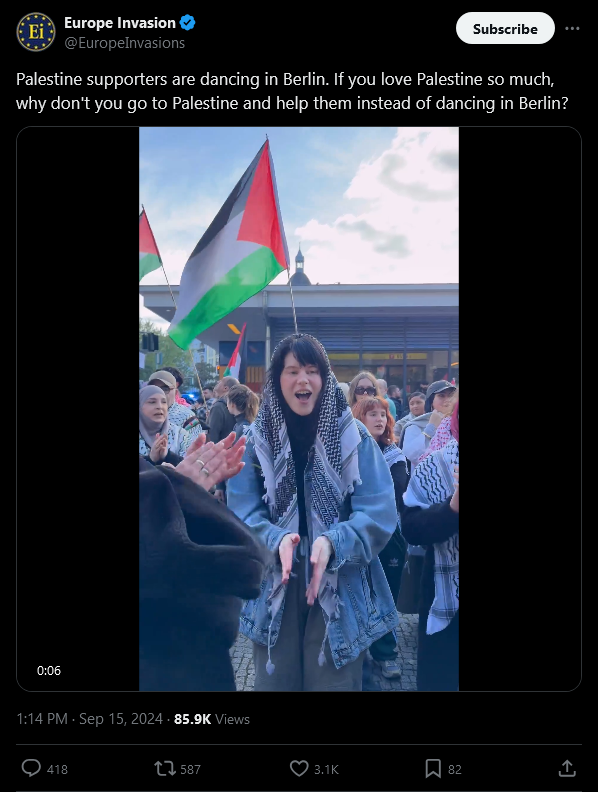

Europe Invasion focuses mainly on xenophobic content targeting immigrants, particularly Muslims. The account frequently draws connections between pro-Palestine protesters and Hamas supporters, equating the two in its posts.

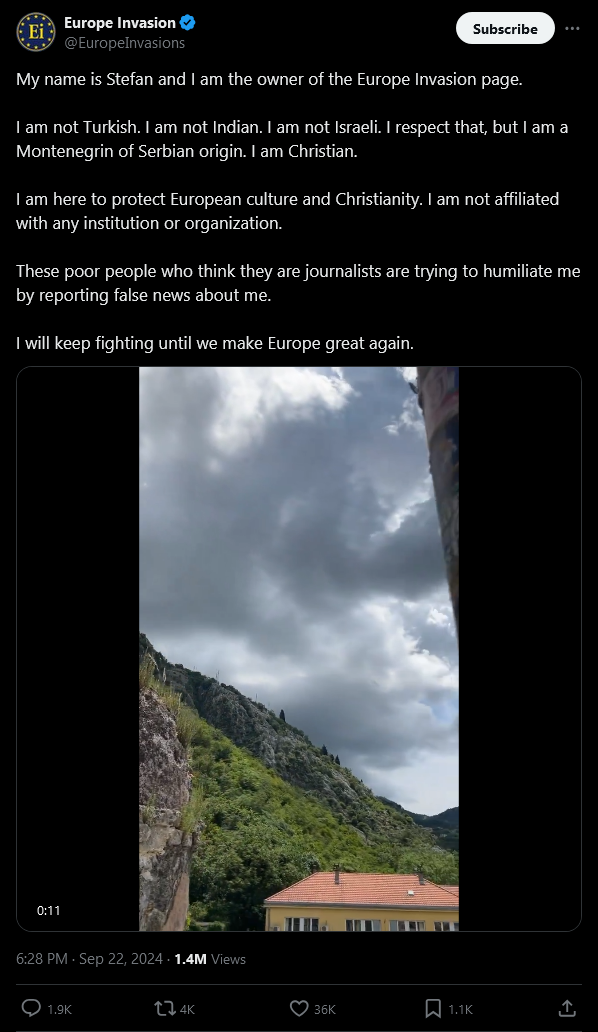

Recent investigations have uncovered that the X account “Europe Invasion,” known for disseminating xenophobic content targeting Muslim immigrants and equating pro-Palestinian protesters with Hamas supporters, is likely operated by a Turkish entrepreneurial couple based in Dubai. Swedish news outlet SVT reported that this couple is also connected to the X account “Algorithm Coach” (@algorithmcoachX). When approached, the couple denied direct involvement, stating they had engaged an advertising agency to promote their business. Both accounts have undergone multiple rebrandings; notably, “Europe Invasion” was previously a cryptocurrency account under the username @makcanerkripto and has a bot score of 4 out of 5, according to Botometer.

Following SVT’s investigation, Europe Invasion updated its profile, claiming to be managed by “Stefan,” a Montenegrin of Serbian origin. However, SVT reported that when they contacted the advertising agency linked to the Turkish couple allegedly behind the account, a person named Stefan responded, using an email account set to Turkish.

The next three accounts on the list, Robin (@Robiiin_Hoodx), Eli Afriat (@EliAfriatISR), and AMIRAN (@Amiran_Zizovi), explicitly reference Israel or Zionism in their bios.

Robin, who joined X in May 2024, describes himself in his bio as: “• Think of nothing but fighting • PROUD JEWISH & PROUD ZIONIST.” Two of these accounts use AI-generated profile pictures of Israeli soldiers. They frequently interact with each other, replying to and reposting each other’s content, and follow various pro-Israel accounts.

Other accounts, including Mara Weiner (@MaraWeiner123), Sonny (@SONNY13432EEDW), Jack Carbon (@JaCar97642), Allison Wolpoff (@AllisonW90557), and Emily Weinberg (@EmilyWeinb23001), display bot-like behavior. Their usernames include random numbers, and they either lack a profile picture or use vague images. Most of these accounts were created after October 2023, coinciding with the start of Israel’s military actions in Gaza. They predominantly respond to pro-Palestine posts by promoting Zionist talking points.

Lebanese researchers Ralph Baydoun and Michel Semaan, from the communications consulting firm InflueAnswers, describe “superbots” as Israel’s new secret weapon in the digital information war over its operations in Gaza.

Earlier versions of online bots were far more rudimentary, with limited language abilities, and were able only to respond to predetermined commands. “Online bots before, especially in the mid-2010s … were mostly regurgitating the same text over and over and over. The text … would very obviously be written by a bot,” Semaan told Al Jazeera.

By contrast, AI-powered superbots that use large language models (LLMs), algorithms trained on vast amounts of text data, can produce smarter, faster, and, most critically, more human-like responses. Examples include the models behind OpenAI’s ChatGPT and Google’s Gemini.

These AI bots have not only been covertly deployed on social media; pro-Israel activists have also assembled AI-powered troll armies to bolster their messaging.

In October 2023, as Israel launched its military campaign in Gaza, Zachary Bamberger, a graduate student at Israel’s Technion University, created an LLM specifically designed to counter what he saw as “anti-Israel” content and amplify pro-Israel posts across online platforms.

Rhetoric AI, the tool offered by Bamberger’s company of the same name, is designed to generate translations of Arabic and Hebrew social media content, assess whether posts violate platform terms of service, and swiftly report any infractions. For posts that don’t violate terms, the tool generates what it considers the most effective counter-argument.

“When deployed on a larger scale, our platform will shift the balance of content and take away the numbers advantage of those who propagate hate, violence, and lies,” Bamberger told the American Technion Society.

To develop Rhetoric AI, Bamberger enlisted the help of 40 doctoral students, and the company now collaborates with tech giants Google and Microsoft.

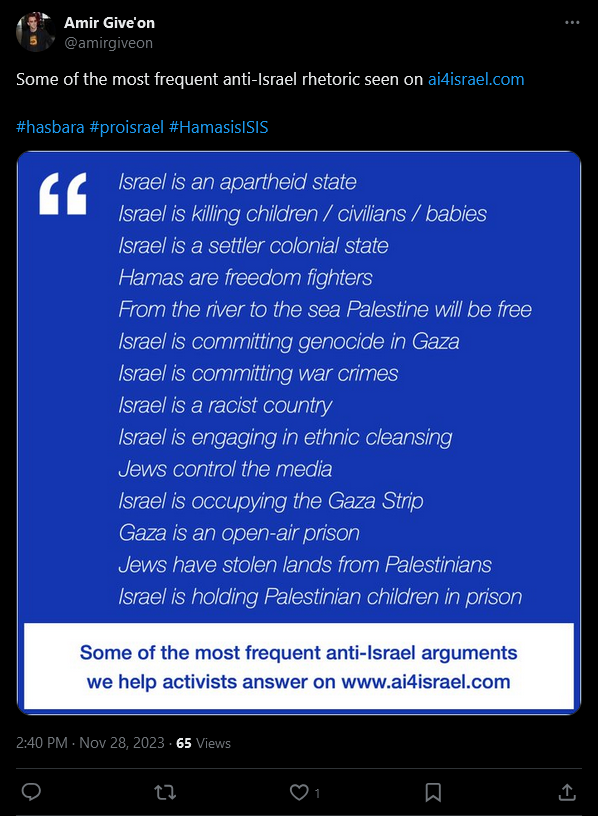

Another platform, AI4Israel, also leverages AI to craft counter-arguments on social media. Founded by Israeli-American data scientist and pro-Israel activist Amir Give’on, the tool is part of a volunteer-based project. In November 2023, Give’on promoted AI4Israel in a LinkedIn article and has since posted about the platform on his X account.

Give’on provided details on how the AI4Israel platform operates, explaining:

“It scans incoming claims and checks them against a pre-existing database. If a claim has been encountered before, the tool retrieves a stored response. If it’s a new claim, the tool generates a response using a Retrieval-Augmented Generation (RAG) model, which draws on a vast corpus of data.”

He also emphasized the role of volunteers in enhancing AI4Israel’s effectiveness:

“Volunteers prioritize claims based on frequency and refine responses, ensuring relevance and accuracy. They also identify gaps in our knowledge base, constantly enriching it, especially in response to current events.”

The Israeli government’s role

AI has played a central role in Israel’s online influence operations, aimed at swaying public opinion on the war. In May 2024, social media company Meta and ChatGPT’s owner, OpenAI, disclosed that they had banned a network of AI-powered accounts spreading pro-Israel and Islamophobic content. The campaign, orchestrated by Israel’s Ministry of Diaspora Affairs and executed by Israeli political consulting firm Stoic, was designed to influence online discourse.

Naim, a Gaza resident, highlighted the damaging effects of these disinformation efforts.

“Every day, I face comments on Twitter [now X] where I am either accused of lying or my news is questioned, despite providing verified sources,” Naim told MintPress News.

“Some individuals…have denied the news of the death of seven of my cousins and family members, falsely claiming that their families are responsible for their sacrifices. This persistent disinformation campaign is a daily challenge as I strive to share accurate information about the atrocities occurring in Gaza.”

In September, Israel joined the first global treaty on AI, an international agreement established to regulate the responsible use of the technology while safeguarding human rights, democracy, and the rule of law. Israel joined it alongside the United States, United Kingdom, European Union, and other countries, and its participation was met with backlash, especially given Israel’s weaponization of AI to carry out its genocidal campaign in Gaza.

Yet Israel’s dangerous AI advancements on the battlefield and online may only intensify. In addition to joining the international treaty, Israel announced the second phase of its Artificial Intelligence Program, which will run until 2027 with an allocated budget of NIS 500 million (approximately $133 million).

For Naim, Israel’s misuse of AI to distort and fabricate information reveals a troubling side of the technology, particularly in its manipulation of public opinion, erosion of trust, and incitement of hate and violence.

As AI’s darker applications make it harder to distinguish fact from fiction, Naim suggests approaching social media content with “a healthy dose of skepticism.”

“Not everything you see online is true, especially if it seems overly sensational or emotional,” Naim cautioned. “Always verify information by searching for the original source, checking its credibility, and looking for confirmation from multiple sources.”

She recommends watching for red flags, like spelling errors and doctored images, and utilizing verification tools, such as reverse image searches and fact-checking websites.

Yet, Naim notes, AI isn’t inherently harmful. “While Israel exploits AI for harmful purposes, we have the opportunity to use AI constructively,” she said. “By leveraging AI, we can amplify and accurately convey the Palestinian narrative, ensuring that the true stories of those affected by the genocide in Gaza are heard across multiple languages and platforms.”

Feature photo | Illustration by MintPress News

Jessica Buxbaum is a Jerusalem-based journalist for MintPress News covering Palestine, Israel, and Syria. Her work has been featured in Middle East Eye, The New Arab and Gulf News.